Dataflow: How to Tell Which Machine Type Is Used

The movement of data in a system is known as data flow. For streaming jobs, the default machine type for Streaming Engine-enabled jobs is …


A dataflow is a simple data pipeline or a series of steps that can be developed by a developer or a business user.

To execute your pipeline using Dataflow, set the required pipeline options, such as the pipeline runner and your Google Cloud project ID. In some situations you must also specify the subnetwork parameter.
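As a minimal sketch, the options can be assembled as command-line flags for a Python pipeline. The flag names (`--runner`, `--project`, `--region`, `--temp_location`, `--machine_type`, `--subnetwork`) are assumed from the Apache Beam Python SDK; the helper `build_dataflow_args` and all example values are hypothetical.

```python
from typing import List, Optional


def build_dataflow_args(
    project: str,
    region: str,
    temp_location: str,
    machine_type: Optional[str] = None,
    subnetwork: Optional[str] = None,
) -> List[str]:
    """Build command-line flags for running a Beam pipeline on Dataflow.

    Hypothetical helper: the flag names follow the Apache Beam Python SDK,
    but the function itself is only an illustration.
    """
    # For Google Cloud execution the runner must be DataflowRunner.
    args = [
        "--runner=DataflowRunner",
        f"--project={project}",
        f"--region={region}",
        f"--temp_location={temp_location}",
    ]
    # Set a worker machine type only when the service default is not wanted.
    if machine_type:
        args.append(f"--machine_type={machine_type}")
    # A subnetwork is required only in some network configurations.
    if subnetwork:
        args.append(f"--subnetwork={subnetwork}")
    return args


# Example values are placeholders, not real resources.
flags = build_dataflow_args("my-project", "us-central1", "gs://my-bucket/tmp",
                            machine_type="n1-standard-2")
print(flags)
```

With the Beam SDK installed, the list would be passed to `PipelineOptions(flags)` from `apache_beam.options.pipeline_options`. Leaving `machine_type` unset means the job runs on the service default for its job type.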

A DFD describes the processes involved in moving data from input to file storage and report generation. You must select a subnetwork in the same region as the zone where you run your Dataflow workers.

Next, click Edit entities. A block diagram node executes when it receives all required inputs. Data flow testing is a type of structural testing.

It finds test paths through a program according to where its variables are defined and where those definitions are used. A related question asks whether it is possible to use a custom machine type for Dataflow instances. The `runner` option selects the pipeline runner that executes your pipeline.
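To make the definition and use idea concrete, here is a minimal sketch that lists definition-use (def-use) pairs for straight-line code. It assumes a hypothetical representation in which each statement records the variables it defines and uses; real data flow testing works on a control flow graph with branches and loops, which this sketch ignores.

```python
from typing import Dict, List, Set, Tuple

# Each statement: (line number, variables defined, variables used).
Statement = Tuple[int, Set[str], Set[str]]


def def_use_pairs(program: List[Statement]) -> List[Tuple[str, int, int]]:
    """Return (variable, def_line, use_line) pairs for straight-line code."""
    last_def: Dict[str, int] = {}  # variable -> line of its most recent definition
    pairs: List[Tuple[str, int, int]] = []
    for line, defs, uses in program:
        # Uses are read before this statement's own definitions take effect.
        for var in sorted(uses):
            if var in last_def:
                pairs.append((var, last_def[var], line))
        for var in defs:
            last_def[var] = line
    return pairs


# 1: x = input()   2: y = x + 1   3: x = y * 2   4: print(x, y)
program = [
    (1, {"x"}, set()),
    (2, {"y"}, {"x"}),
    (3, {"x"}, {"y"}),
    (4, set(), {"x", "y"}),
]
print(def_use_pairs(program))
# [('x', 1, 2), ('y', 2, 3), ('x', 3, 4), ('y', 2, 4)]
```

Each pair is a path segment that a data flow test suite should exercise; note that the definition of `x` on line 1 never reaches line 4 because line 3 redefines it.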

The most common data types are Integer, String, Array, Boolean and Float. Once you have set up all the options and authorized the shell with GCP, all you need to do is run the fat JAR that we produced. When a node executes, it produces output data and passes it to the next node in the dataflow path.

Custom containers are supported for pipelines using Dataflow Runner v2. Dataflow machines come in static and dynamic designs. Specify a subnetwork using either a complete URL or an abbreviated path.
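A minimal sketch of the two forms, assuming the formats given in Google's Dataflow networking documentation (abbreviated `regions/REGION/subnetworks/SUBNETWORK`, complete URL prefixed with `https://www.googleapis.com/compute/v1/projects/PROJECT/`). The helper `subnetwork_value` is hypothetical; check the current documentation before relying on it, and remember that the region must match the one where the workers run.

```python
from typing import Optional

COMPUTE_API = "https://www.googleapis.com/compute/v1"


def subnetwork_value(region: str, subnetwork: str,
                     host_project: Optional[str] = None) -> str:
    """Format the value of the --subnetwork option (hypothetical helper)."""
    # Abbreviated path: regions/REGION/subnetworks/SUBNETWORK.
    abbreviated = f"regions/{region}/subnetworks/{subnetwork}"
    if host_project is None:
        return abbreviated
    # Complete URL: also names the project that owns the network,
    # e.g. the host project of a Shared VPC network.
    return f"{COMPUTE_API}/projects/{host_project}/{abbreviated}"


print(subnetwork_value("us-central1", "my-subnet"))
# regions/us-central1/subnetworks/my-subnet
print(subnetwork_value("us-central1", "my-subnet", host_project="host-project"))
# https://www.googleapis.com/compute/v1/projects/host-project/regions/us-central1/subnetworks/my-subnet
```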

You can think of a dataflow as Power Query in the cloud. Name: the text that should appear on the diagram and be used in every description that refers to the data flow. While it waits for the job to finish, the Cloud Dataflow Runner prints job status updates and console messages.

Source: where the data flow originates. LabVIEW follows a dataflow model for running VIs. To cancel a job, use the Dataflow Monitoring Interface or the Dataflow Command-line Interface.
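For the command-line route, a job can be cancelled with `gcloud dataflow jobs cancel JOB_ID --region=REGION`. The sketch below only builds that command as an argument list; the helper name and the job ID are hypothetical placeholders, and actually running it requires an installed, authenticated gcloud CLI.

```python
import shlex
from typing import List


def cancel_command(job_id: str, region: str) -> List[str]:
    """Build the gcloud command that cancels a running Dataflow job."""
    return ["gcloud", "dataflow", "jobs", "cancel", job_id, f"--region={region}"]


# Placeholder job ID; look up the real one in the monitoring interface
# or with `gcloud dataflow jobs list --region=REGION`.
cmd = cancel_command("2024-01-01_00_00_00-1234567890", "us-central1")
print(shlex.join(cmd))
# To execute it: subprocess.run(cmd, check=True)
```

Building an argument list instead of a shell string avoids quoting problems if the values ever contain spaces.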

However, if you refresh C, you will have to refresh the others independently.

One such situation is when the subnetwork you specify is in a custom mode network. You can also create a Data Flow activity with the UI. Uses are the statements where the defined values are read or referenced.

You can define whether the dataflow is certified or promoted. In a data flow machine, a program consists of data flow nodes.

A node is ready when all of its inputs have tokens. Clicking the arrow next to an entity shows the fields of the selected entity.

For batch jobs, the default machine type is n1-standard-1. A data flow node fires (is fetched and executed) when all its inputs are ready, i.e. when every input holds a token. To use a Data Flow activity in a pipeline, complete the following steps.
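The firing rule can be simulated in a few lines. This is a minimal sketch, assuming a hypothetical graph format in which each node is a Python function plus the names of its input arcs and output arc; a node fires only when a token is present on every input arc, consumes those tokens, and places its result on its output arc. Fan-out (one arc feeding several nodes) is deliberately not modeled.

```python
import operator
from typing import Callable, Dict, List, Tuple

# node name -> (operation, input arc names, output arc name)
Graph = Dict[str, Tuple[Callable[..., int], List[str], str]]


def run(graph: Graph, tokens: Dict[str, int]) -> Dict[str, int]:
    """Fire nodes whose inputs all hold tokens until no node can fire."""
    tokens = dict(tokens)  # arc name -> value currently on that arc
    fired = set()
    progress = True
    while progress:
        progress = False
        for name, (op, inputs, output) in graph.items():
            if name not in fired and all(arc in tokens for arc in inputs):
                # Firing consumes the input tokens and produces one output token.
                args = [tokens.pop(arc) for arc in inputs]
                tokens[output] = op(*args)
                fired.add(name)
                progress = True
    return tokens


# (a + b) * (c - d): "add" and "sub" are independent and could fire in any order.
graph: Graph = {
    "add": (operator.add, ["a", "b"], "s"),
    "sub": (operator.sub, ["c", "d"], "t"),
    "mul": (operator.mul, ["s", "t"], "out"),
}
print(run(graph, {"a": 2, "b": 3, "c": 10, "d": 4}))  # {'out': 30}
```

If `c` and `d` never arrive, `mul` never fires and the partial result stays on arc `s`, which is exactly the "wait for all inputs" behavior.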

Designs that use content-addressable memory (CAM) are called dynamic dataflow machines. Designs that use conventional memory addresses as data dependency tags are called static dataflow machines.
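The practical difference is how operands are matched. In a dynamic (tagged-token) design, each token carries a context tag, such as an activation or iteration number, and a matching store (the role played by the associative memory) pairs operands only when both node and tag agree. The sketch below assumes hypothetical two-input nodes and integer tags; it illustrates the matching idea, not any particular machine.

```python
from typing import Dict, List, Optional, Tuple

# A token: (destination node, context tag, input port 0 or 1, value).
Token = Tuple[str, int, int, int]


class MatchingStore:
    """Pairs operands by (node, tag), as a tagged-token machine would."""

    def __init__(self) -> None:
        self.waiting: Dict[Tuple[str, int], Tuple[int, int]] = {}

    def arrive(self, token: Token) -> Optional[Tuple[str, int, int, int]]:
        node, tag, port, value = token
        key = (node, tag)
        if key not in self.waiting:
            # First operand for this activation: wait for its partner.
            self.waiting[key] = (port, value)
            return None
        other_port, other_value = self.waiting.pop(key)
        # Order operands by port so the node sees (left, right).
        left, right = (other_value, value) if other_port == 0 else (value, other_value)
        return node, tag, left, right


store = MatchingStore()
# Two activations (tags 1 and 2) of the same "add" node, interleaved.
arrivals: List[Token] = [("add", 1, 0, 10), ("add", 2, 0, 100),
                         ("add", 2, 1, 5), ("add", 1, 1, 7)]
for token in arrivals:
    match = store.arrive(token)
    if match:
        node, tag, left, right = match
        print(f"{node} fires for tag {tag}: {left} + {right} = {left + right}")
# add fires for tag 2: 100 + 5 = 105
# add fires for tag 1: 10 + 7 = 17
```

A static machine keyed only by node address would have a single slot for `add`, so the two activations could not be told apart; the tag is what lets them overlap safely.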

While the result is connected to the active job, note that pressing Ctrl+C from the command line does not cancel your job. Data flow diagrams (DFDs) graphically represent the flow of data in a business information system. Selecting Off disables the compute engine and DirectQuery capability for this dataflow.

You can connect Azure Data Lake Storage Gen2 for dataflow storage. While moving data from sources through transformations to destinations, a data flow component must sometimes convert between the SQL Server Integration Services types defined in the DataType enumeration and the managed data types of the Microsoft .NET Framework defined in the System namespace. When Dataflow launches worker VMs, it uses Docker container images to launch containerized SDK processes on the workers.

Description: a general description of the data flow. You can customize the runtime environment of user code in Dataflow pipelines by supplying a custom container image. Data flow diagrams can be divided into logical and physical diagrams.

The movement of data through the nodes determines the execution order of the VIs and functions on the block diagram. One answer says you can set the custom machine type for a Dataflow operation by specifying the name as `custom--`. However, that answer applies to the Java API and the old Dataflow SDK, not to the newer Apache Beam implementation and Python. The source can be an external entity, a process, or a data flow coming from a data store.
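For Python pipelines, a custom machine type name would go in the `--machine_type` flag (also accepted as `--worker_machine_type` in the Beam Python SDK). The naming scheme `custom-<vCPUs>-<memory in MB>` and the rule that memory is a multiple of 256 MB are assumptions taken from Compute Engine's custom machine type documentation, not from the answer quoted above; `custom_machine_type` is a hypothetical helper.

```python
def custom_machine_type(vcpus: int, memory_gb: float) -> str:
    """Return a Compute Engine custom machine type name (hypothetical helper)."""
    memory_mb = int(memory_gb * 1024)
    # Assumption: custom machine memory is given in MB, in multiples of 256 MB.
    if memory_mb % 256 != 0:
        raise ValueError("memory must be a multiple of 256 MB")
    return f"custom-{vcpus}-{memory_mb}"


flag = f"--machine_type={custom_machine_type(4, 16)}"
print(flag)  # --machine_type=custom-4-16384
```

Because the resulting name ends up in the job's pipeline options, it is also where you would look later to tell which machine type the job was launched with.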

Selecting Optimized means the engine is used only if there is a reference to this dataflow. If you have dataflows A, B and C in a single workspace, chained as A → B → C, refreshing the source A also refreshes the downstream entities. Destination: where the data flow ends, which can be any of the items listed under the source.
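The chained refresh behavior amounts to walking downstream from the refreshed dataflow. A minimal sketch, assuming a hypothetical dictionary of downstream links rather than any Power BI API: refreshing A reaches B and C, while refreshing C reaches only C.

```python
from collections import deque
from typing import Dict, List


def refresh_order(downstream: Dict[str, List[str]], start: str) -> List[str]:
    """Return the dataflows reached when `start` is refreshed (breadth-first)."""
    order: List[str] = []
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        order.append(current)
        for child in downstream.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return order


chain = {"A": ["B"], "B": ["C"]}
print(refresh_order(chain, "A"))  # ['A', 'B', 'C']
print(refresh_order(chain, "C"))  # ['C']
```

Breadth-first order is fine for a simple chain; for graphs where one dataflow has several upstream parents, a real scheduler would need a topological order so that every input is refreshed first.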

It has nothing to do with data flow diagrams. You can add data to a table in Dataverse by using Power Query. What is a data flow diagram?

The `project` option is the ID of your Google Cloud project. Clicking Add new entities takes us back to the dataflow Get Data window. A data flow is represented by an arrow.

Every data type stores data according to its own specific type.

For example, to describe an AND gate using dataflow modeling, you write a single continuous assignment of the form `assign out = a & b;`. The tail of the arrow is the source and the head of the arrow is the destination. Here we look at some of the most commonly used data types in LabVIEW.

An Integer can only store and display integer values. Dataflow modeling describes hardware in terms of the flow of data from input to output. Using this pipeline, data can be fetched into the Power BI service from a wide variety of sources.

A data flow diagram is a simple formalism for representing the flow of data in a system. Static dataflow machines did not allow multiple instances of the same routine to execute simultaneously, because their simple tags could not differentiate between them. For Google Cloud execution, the runner must be DataflowRunner.

Visit the Power Apps dataflow community forum to share what you're doing, ask questions, or submit new ideas. A small set of dataflow operators can be used to …

Search for Data Flow in the pipeline Activities pane and drag a Data Flow activity to the pipeline canvas. Then select the new Data Flow activity on the canvas, if it is not already selected, and open its Settings tab to edit its details.

You will now see the 3 entities in our dataflow.

How To Use Google Cloud Dataflow With Tensorflow For Batch Predictive Analysis Google Cloud Big Data And Machine Learning Machine Learning Models Predictions

How To Use Dataflow To Make The Refresh Of Power Bi Solution Faster Radacad Power Solutions Data Science

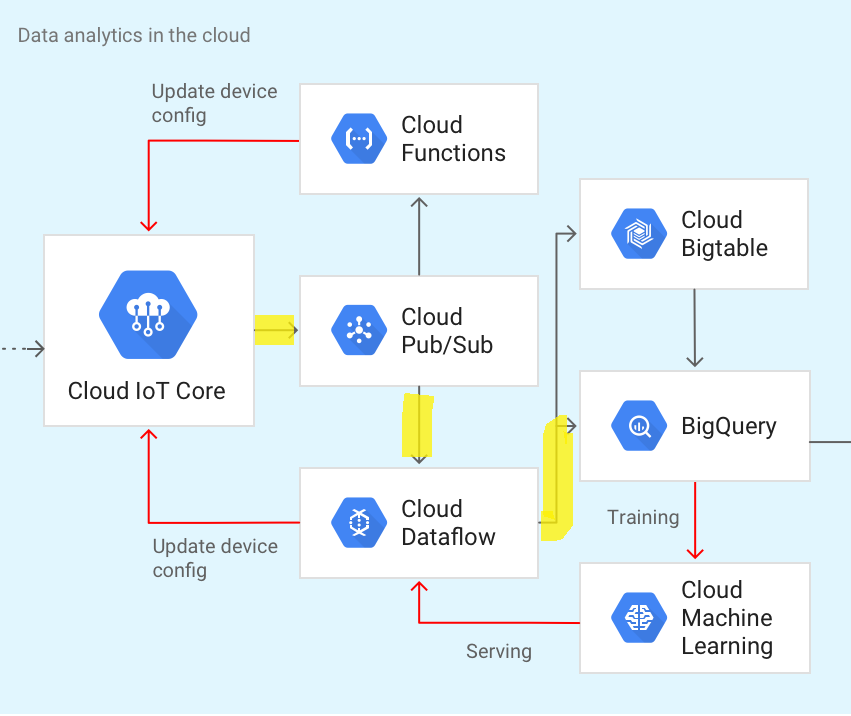

How To Create Dataflow Iot Pipeline Google Cloud Platform By Huzaifa Kapasi Towards Data Science

Comments

Post a Comment